Asking & Listening: The Problem with Qualitative Data … and what to do about it

When it comes to quantitative data (i.e., number data), we’ve come up with a lot of “scientific” standards for assessing the quality of the data and how confident we should feel about the conclusions we draw from it. At its most basic level, that means things like having a sufficiently large sample size (a common rule of thumb is a minimum of 30, and more is generally better).

When it comes to qualitative data (i.e., word data), the standards aren’t as clear and they don’t seem as scientific. Part of the problem is that our words are part of living languages. Living things grow and change over time. That leads to assumptions and misunderstandings – and these can make us think that word data isn’t as trustworthy as number data.

In this post, I debunk that myth and show how the real problem with qualitative data stems from getting an answer without getting the meaning.

Getting an Answer Isn’t Enough

When we have questions, it’s nice to get an “answer” – something that makes us feel like we’ve got some idea of what’s going on and that our situations are less uncertain.

Unfortunately, getting an answer isn’t enough.

This is true whether you’re working with quantitative or qualitative data. On a spreadsheet, that might mean checking your calculation to make sure that your formula is correct and that it’s pulling from the correct data. With word data, this means that you usually have to press a little harder to make sure you understand what people mean by what they say.

Ambiguity in Word Data

In another post, I discussed how the word “bi-weekly” has multiple dictionary definitions, which has implications for how carefully you might need to specify schedule-related terminology in your SOPs (e.g., a bi-weekly task might need to be performed roughly 2x/month or 8x/month, depending on whether you read it as “every two weeks” or “twice a week”).
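To see how far apart those two readings land, here is a rough back-of-the-envelope sketch. It assumes a 52-week year averaged over 12 months, and the function name is just an illustrative choice, not something from the earlier post.

```python
WEEKS_PER_YEAR = 52    # assumption: a standard 52-week year
MONTHS_PER_YEAR = 12

def runs_per_month(runs_per_week: float) -> float:
    """Convert a weekly frequency into an average monthly frequency."""
    return runs_per_week * WEEKS_PER_YEAR / MONTHS_PER_YEAR

# Reading 1: "bi-weekly" = once every two weeks (half a run per week)
every_two_weeks = runs_per_month(0.5)

# Reading 2: "bi-weekly" = twice a week
twice_a_week = runs_per_month(2)

print(f"Every two weeks: about {every_two_weeks:.1f} times per month")
print(f"Twice a week:    about {twice_a_week:.1f} times per month")
```

Same word, roughly a fourfold difference in workload, which is why an SOP is safer spelling out the schedule than relying on the label.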

But that kind of obvious ambiguity isn’t the only kind that can get in the way of understanding the data. Other sources include:

- Portmanteaus – words that we create by combining parts of other words, like “spork” (spoon + fork) or “turducken” (turkey + duck + chicken).

  - There’s an order to a turducken: a chicken stuffed into a duck stuffed into a turkey. If you know, you know.

  - But what about a spork? That could mean a utensil with fork tines at one end of the handle and a shallow bowl at the other, or it could mean a utensil with the bowl and tines combined at a single end of the handle. That means the version of the spork you automatically picture in your head might be “wrong” when compared to another person’s picture of a spork.

- Familect (family + dialect) – words that you make up and use within your own family, so that only family members really know the meaning (or joke) behind them. Outside of this circle of people-in-the-know, the words might sound like gibberish, or people may make assumptions about what they mean.

This is just one reason that people saying things in their own words doesn’t necessarily give you as much information as you’d think.

A Real-life Example of an Answer That’s Not an Answer

At a recent conference, I had the opportunity to hear a fellow audience member dismiss the validity of forums, interviews, and other sources of qualitative data – based on his own experience of asking his customers what they want.

In his example, he had asked his customers about their food preferences so that he might cater better to those preferences. Their answer: “healthier choices.”

He proceeded to stock up on salad choices in addition to his usual offerings.

The problem? The salads didn’t sell, but “unhealthy” options like donuts continued to sell well.

His conclusion? That the data was bogus, and that you can’t trust what people say.

There are certainly a lot of good reasons to buy into his interpretation/conclusion – biases, a desire to fit in with a group, wishful thinking, and false perceptions are all known problems with self-reported data.

But we can’t be sure that’s what happened here because, as it turns out, the word data he collected was too superficial to be useful. He had collected an “answer” (that is, a non-blank response to a question) but not enough context to interpret that answer and turn it into information that can be used to explain things and make decisions. He needed better context and/or follow-up. (I give a concrete example of what that might look like at the bottom of the next section.)

Ask for the Meaning Behind the Words

Before we go further, let’s agree that it’s important to start from a place where you acknowledge the ambiguity of word data and the problems that come along with self-reported data (e.g., biases and wishful thinking). What does this agreement mean for data interpretation, meaning-making, and decision-making?

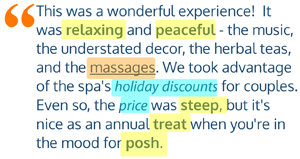

When you’re working from customer reviews, you’re often “stuck” with what people have written, with no chance for further investigation. Data interpretation relies more on things like word / concept / theme frequency and any context you might be able to lend to that review. If you’re looking for the jargon, that usually means methods like sentiment analysis or thematic analysis. Keep in mind that frequency distributions alone won’t tell you how “important” a data point is in the dataset.
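As a minimal sketch of what a word-frequency pass might look like, the snippet below counts how many reviews mention each term. The three reviews are invented purely for illustration, and the stop-word list is a tiny hand-picked assumption. A real analysis would use actual review text and a fuller list.

```python
import re
from collections import Counter

# Hypothetical reviews, made up for this example only.
reviews = [
    "Wish there were healthier choices at lunch.",
    "Love the donuts! More healthy snacks would be nice.",
    "The donuts are great, coffee is fine.",
]

# Tiny hand-picked stop-word list (an assumption, not a standard list).
STOP_WORDS = {"the", "a", "at", "is", "are", "be", "would", "there", "were", "more"}

def term_counts(texts):
    """Count the number of reviews in which each term appears."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        # Use a set so each term counts once per review and one
        # long, repetitive review cannot dominate the totals.
        counts.update({w for w in words if w not in STOP_WORDS})
    return counts

counts = term_counts(reviews)
print(counts.most_common(5))
```

Notice that “healthier” and “healthy” are tallied as separate terms here. Grouping them under one theme is a judgment call that a raw count won’t make for you, which is part of why frequency alone doesn’t tell you what matters.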

When you’re crafting your own feedback forms for events or otherwise conducting survey research, you have a chance to get ahead of the potential problems by asking better questions – and by offering chances for follow-up that provide more meaning.

If you’re asking an open-ended question, your follow-up question will tend to be yet another open-ended question that does something like ask for an example. This example is what gives you the context you need to interpret what was meant by the first answer.

With a single-select question, your follow-up question is a chance to ask why they chose that answer and provide more information. Again, this follow-up question is an open-ended question.

If you already know that you’re looking for more specific data (e.g., more information on “healthier choices”), your open-ended questions and single-select questions can be more targeted (e.g., “What do you mean when you say something is a healthier choice? Give an example.”). You can also mix in an attitude/preference question, such as a true/false item like “I like to shop / eat at places that have healthy options available.” Attitudes like that can indicate that people like the idea of going to places that offer healthy choices (or other places that make them feel good about themselves) even if they don’t want to eat differently or otherwise change their own behaviors.
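One way to keep yourself honest about follow-ups is to write the form down as data and check it before you send it out. The sketch below is only an illustration: the `Question` structure, the `kind` labels, and the first question’s wording are all hypothetical, and attaching a “why” follow-up to the true/false item is my own extension of the single-select advice above.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Question:
    text: str
    kind: str  # "open_ended", "single_select", or "true_false"
    follow_up: Optional["Question"] = None

survey = [
    Question(
        # Hypothetical wording for the initial question.
        text="What would you like to see more of on our menu?",
        kind="open_ended",
        follow_up=Question(
            text="What do you mean when you say something is a healthier choice? Give an example.",
            kind="open_ended",
        ),
    ),
    Question(
        text="I like to shop / eat at places that have healthy options available.",
        kind="true_false",
        follow_up=Question(text="Why did you choose that answer?", kind="open_ended"),
    ),
]

def missing_follow_ups(questions):
    """Return the text of any question lacking an open-ended follow-up."""
    return [
        q.text
        for q in questions
        if q.follow_up is None or q.follow_up.kind != "open_ended"
    ]

print(missing_follow_ups(survey))  # prints [] because every question has one
```

If the list comes back non-empty, those are the questions most likely to give you an answer without the meaning behind it.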

Want help with your data?

Have you dismissed the value of data in the past because it seemed misleading or hard to work with?

Want help collecting data that you can turn into meaningful information? Let’s connect and talk about what questions you have, what data might be appropriate and possible to collect, and how it could be analyzed to explain things and make informed decisions.